Amazon ECS

Overview

This service contains Terraform code to deploy a production-grade cluster on AWS using Elastic Container Service (ECS).

This service launches an ECS cluster on top of an Auto Scaling Group that you manage. If you wish to launch an ECS cluster on top of Fargate that is completely managed by AWS, refer to the ecs-fargate-cluster module. Refer to the section EC2 vs Fargate Launch Types for more information on the differences between the two flavors.

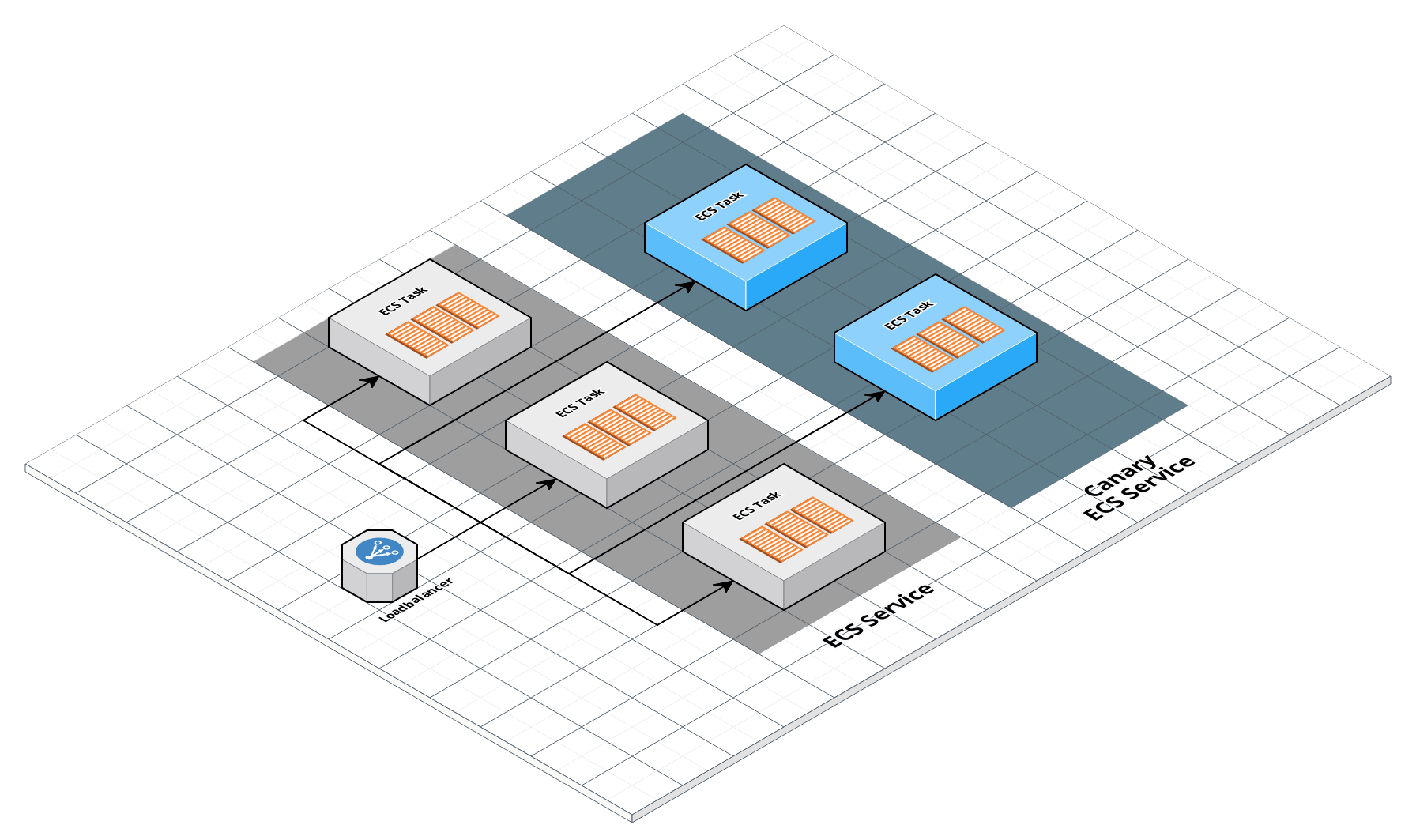

ECS architecture

Features

This Terraform module launches an Elastic Container Service (ECS) cluster that you can use to run Docker containers. The cluster consists of a configurable number of instances in an Auto Scaling Group (ASG). Each instance:

- Runs the ECS Container Agent so it can communicate with the ECS scheduler.

- Authenticates with a Docker repo so it can download private images. The Docker repo auth details should be encrypted using AWS Key Management Service (KMS) and passed in as input variables. When booting up, the instances use gruntkms to decrypt the data in memory. Note that the IAM role for these instances, which uses `var.cluster_name` as its name, must be granted access to the Customer Master Key (CMK) used to encrypt the data.

- Runs the CloudWatch Logs Agent to send all logs in syslog to CloudWatch Logs. This is configured using the cloudwatch-agent module.

- Emits custom metrics that are not available by default in CloudWatch, including memory and disk usage. This is configured using the cloudwatch-agent module.

- Runs the syslog module to automatically rotate and rate limit syslog so that your instances don’t run out of disk space from large volumes of logs.

- Runs the ssh-grunt module so that developers can upload their public SSH keys to IAM and use those SSH keys, along with their IAM user names, to SSH to the ECS nodes.

- Runs the auto-update module so that the ECS nodes install security updates automatically.
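
To illustrate the KMS access requirement in the list above, here is a minimal sketch of granting the instance IAM role permission to decrypt with a CMK. It assumes this service is instantiated as a module named `ecs_cluster` and that you define the `docker_auth_kms_key_arn` variable yourself; both names are hypothetical. Depending on how the CMK's key policy is written, the role may also need to be allowed there.

```hcl
# Hypothetical variable: the ARN of the CMK used to encrypt the Docker repo auth details.
variable "docker_auth_kms_key_arn" {
  description = "ARN of the KMS CMK used to encrypt the Docker repo auth details"
  type        = string
}

# Policy document that allows decrypting with that one key only.
data "aws_iam_policy_document" "decrypt_docker_auth" {
  statement {
    effect    = "Allow"
    actions   = ["kms:Decrypt"]
    resources = [var.docker_auth_kms_key_arn]
  }
}

# Attach the policy inline to the IAM role of the ECS instances.
# "module.ecs_cluster" is an assumed module name; ecs_instance_iam_role_name
# is one of the outputs listed in the Reference section.
resource "aws_iam_role_policy" "decrypt_docker_auth" {
  name   = "decrypt-docker-auth"
  role   = module.ecs_cluster.ecs_instance_iam_role_name
  policy = data.aws_iam_policy_document.decrypt_docker_auth.json
}
```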

Learn

Note: This repo is part of the Gruntwork Service Catalog, a collection of reusable, battle-tested, production-ready infrastructure code. If you’ve never used the Service Catalog before, make sure to read How to use the Gruntwork Service Catalog!

Under the hood, this is all implemented using Terraform modules from the Gruntwork terraform-aws-ecs repo. If you are a subscriber and don’t have access to this repo, email support@gruntwork.io.

Core concepts

To understand core concepts, such as what ECS is and the different cluster types, see the documentation in the terraform-aws-ecs repo.

To use ECS, you first deploy one or more EC2 Instances into a "cluster". The ECS scheduler can then deploy Docker containers across any of the instances in this cluster. Each instance needs to have the Amazon ECS Agent installed so it can communicate with ECS and register itself as part of the right cluster.

For more info on ECS clusters, including how to run Docker containers in a cluster, how to add additional security group rules, how to handle IAM policies, and more, check out the ecs-cluster documentation in the terraform-aws-ecs repo.

For info on finding your Docker container logs and custom metrics in CloudWatch, check out the cloudwatch-agent documentation.

Repo organization

- modules: The main implementation code for this repo, broken down into multiple standalone, orthogonal submodules.

- examples: This folder contains working examples of how to use the submodules.

- test: Automated tests for the modules and examples.

Deploy

Non-production deployment (quick start for learning)

If you just want to try this repo out for experimenting and learning, check out the following resources:

- examples/for-learning-and-testing folder: The `examples/for-learning-and-testing` folder contains standalone sample code optimized for learning, experimenting, and testing (but not direct production usage).
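
For orientation, a call to this service might look roughly like the sketch below. The input names come from the Reference section, but the `source` path, the `<VERSION>` placeholder, the module name `ecs_cluster`, and every ID and value are assumptions for illustration only. Check the module's variables for the full set of required inputs and their defaults.

```hcl
module "ecs_cluster" {
  # Assumed source path; replace <VERSION> with a real release tag.
  source = "git::git@github.com:gruntwork-io/terraform-aws-service-catalog.git//modules/services/ecs-cluster?ref=<VERSION>"

  cluster_name          = "example-ecs-cluster"
  cluster_min_size      = 2
  cluster_max_size      = 4
  cluster_instance_type = "t2.medium"

  # One of cluster_instance_ami or cluster_instance_ami_filters is required.
  # The AMI ID below is a placeholder.
  cluster_instance_ami         = "ami-0123456789abcdef0"
  cluster_instance_ami_filters = null

  # Placeholder network IDs.
  vpc_id         = "vpc-0123456789abcdef0"
  vpc_subnet_ids = ["subnet-0123456789abcdef0", "subnet-0fedcba9876543210"]
}
```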

Production deployment

If you want to deploy this repo in production, check out the following resources:

- examples/for-production folder: The `examples/for-production` folder contains sample code optimized for direct usage in production. This is code from the Gruntwork Reference Architecture, and it shows you how we build an end-to-end, integrated tech stack on top of the Gruntwork Service Catalog.

Manage

For information on how to configure cluster autoscaling, see How do you configure cluster autoscaling?
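
As a rough sketch, the autoscaling-related inputs described in the Reference section could be combined as in the fragment below. The values are illustrative, not recommendations, and the `source` path and module name are assumptions.

```hcl
module "ecs_cluster" {
  # Assumed source path; replace <VERSION> with a real release tag.
  source = "git::git@github.com:gruntwork-io/terraform-aws-service-catalog.git//modules/services/ecs-cluster?ref=<VERSION>"

  # Other inputs (cluster name, sizes, AMI, VPC settings) are omitted from this fragment.

  # Let a capacity provider scale the ASG behind the cluster.
  capacity_provider_enabled = true

  # Target cluster utilization, from 1 to 100.
  capacity_provider_target = 90

  # Bounds on how many instances a single scaling step may add or remove (1 to 10000).
  capacity_provider_min_scale_step = 1
  capacity_provider_max_scale_step = 10

  # With capacity providers on, only instances running 0 ECS tasks are scaled in.
  autoscaling_termination_protection = true
}
```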

For information on how to manage your ECS cluster, see the documentation in the terraform-aws-ecs repo.

Reference

- Inputs

- Outputs

Inputs

alarms_sns_topic_arn— The ARNs of SNS topics where CloudWatch alarms (e.g., for CPU, memory, and disk space usage) should send notifications

allow_ssh_from_cidr_blocks— The IP address ranges in CIDR format from which to allow incoming SSH requests to the ECS instances.

allow_ssh_from_security_group_ids— The IDs of security groups from which to allow incoming SSH requests to the ECS instances.

autoscaling_termination_protection— Protect EC2 instances running ECS tasks from being terminated due to scale in (spot instances do not support lifecycle modifications). Note that the behavior of termination protection differs between clusters with capacity providers and clusters without. When capacity providers are turned on and this flag is true, only instances that have 0 ECS tasks running will be scaled in, regardless of `capacity_provider_target`. If capacity providers are turned off and this flag is true, this will prevent ANY instances from being scaled in.

capacity_provider_enabled— Enable a capacity provider to autoscale the EC2 ASG created for this ECS cluster.

capacity_provider_max_scale_step— Maximum step adjustment size to the ASG's desired instance count. A number between 1 and 10000.

capacity_provider_min_scale_step— Minimum step adjustment size to the ASG's desired instance count. A number between 1 and 10000.

capacity_provider_target— Target cluster utilization for the ASG capacity provider; a number from 1 to 100. This number influences when scale out happens, and when instances should be scaled in. For example, a setting of 90 means that new instances will be provisioned when all instances are at 90% utilization, while instances that are only 10% utilized (CPU and Memory usage from tasks = 10%) will be scaled in.

cloud_init_parts— Cloud init scripts to run on the ECS cluster instances during boot. See the part blocks in https://www.terraform.io/docs/providers/template/d/cloudinit_config.html for syntax.

cloudwatch_log_group_kms_key_id— The ID (ARN, alias ARN, AWS ID) of a customer managed KMS Key to use for encrypting log data.

cloudwatch_log_group_name— The name of the log group to create in CloudWatch. Defaults to `var.cluster_name-logs`.

cloudwatch_log_group_retention_in_days— The number of days to retain log events in the log group. Refer to https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/cloudwatch_log_group#retention_in_days for all the valid values. When null, the log events are retained forever.

cloudwatch_log_group_tags— Tags to apply on the CloudWatch Log Group, encoded as a map where the keys are tag keys and values are tag values.

cluster_access_from_sgs— Specify a list of Security Groups that will have access to the ECS cluster. Only used if `enable_cluster_access_ports` is set to true.

cluster_instance_ami— The AMI to run on each instance in the ECS cluster. You can build the AMI using the Packer template ecs-node-al2.json. One of `cluster_instance_ami` or `cluster_instance_ami_filters` is required.

cluster_instance_ami_filters— Properties on the AMI that can be used to look up a prebuilt AMI for use with ECS workers. You can build the AMI using the Packer template ecs-node-al2.json. Only used if `cluster_instance_ami` is null. One of `cluster_instance_ami` or `cluster_instance_ami_filters` is required. Set to null if `cluster_instance_ami` is set.

cluster_instance_associate_public_ip_address— Whether to associate a public IP address with an instance in a VPC

cluster_instance_keypair_name— The name of the Key Pair that can be used to SSH to each instance in the ECS cluster

cluster_instance_type— The type of instances to run in the ECS cluster (e.g. t2.medium)

cluster_max_size— The maximum number of instances to run in the ECS cluster

cluster_min_size— The minimum number of instances to run in the ECS cluster

cluster_name— The name of the ECS cluster

default_user— The default OS user for the ECS worker AMI. For Amazon Linux AMIs, which is what the Packer template in ecs-node-al2.json uses, the default OS user is 'ec2-user'.

disallowed_availability_zones— A list of availability zones in the region that should be skipped when deploying ECS. You can use this to avoid availability zones that may not be able to provision the resources (e.g., the instance type does not exist there). If empty, allows all availability zones.

enable_cloudwatch_log_aggregation— Set to true to enable CloudWatch log aggregation for the ECS cluster

enable_cloudwatch_metrics— Set to true to enable CloudWatch metrics collection for the ECS cluster

enable_cluster_access_ports— Specify a list of ECS Cluster ports which should be accessible from the security groups given in `cluster_access_from_sgs`

enable_ecs_cloudwatch_alarms— Set to true to enable several basic CloudWatch alarms around CPU usage, memory usage, and disk space usage. If set to true, make sure to specify SNS topics to send notifications to using `alarms_sns_topic_arn`

enable_fail2ban— Enable fail2ban to block brute force login attempts. Defaults to true

enable_ip_lockdown— Enable ip-lockdown to block access to the instance metadata. Defaults to true

enable_ssh_grunt— Set to true to add IAM permissions for ssh-grunt (https://github.com/gruntwork-io/terraform-aws-security/tree/master/modules/ssh-grunt), which will allow you to manage SSH access via IAM groups.

external_account_ssh_grunt_role_arn— Since our IAM users are defined in a separate AWS account, this variable is used to specify the ARN of an IAM role that allows ssh-grunt to retrieve IAM group and public SSH key info from that account.

high_cpu_utilization_evaluation_periods— The number of periods over which data is compared to the specified threshold

high_cpu_utilization_period— The period, in seconds, over which to measure the CPU utilization percentage. Only used if `enable_ecs_cloudwatch_alarms` is set to true

high_cpu_utilization_statistic— The statistic to apply to the alarm's high CPU metric. One of the following is supported: SampleCount, Average, Sum, Minimum, Maximum

high_cpu_utilization_threshold— Trigger an alarm if the ECS Cluster has a CPU utilization percentage above this threshold. Only used if `enable_ecs_cloudwatch_alarms` is set to true

high_disk_utilization_period— The period, in seconds, over which to measure the disk utilization percentage. Only used if `enable_ecs_cloudwatch_alarms` is set to true

high_disk_utilization_threshold— Trigger an alarm if the EC2 instances in the ECS Cluster have a disk utilization percentage above this threshold. Only used if `enable_ecs_cloudwatch_alarms` is set to true

high_memory_utilization_evaluation_periods— The number of periods over which data is compared to the specified threshold

high_memory_utilization_period— The period, in seconds, over which to measure the memory utilization percentage. Only used if `enable_ecs_cloudwatch_alarms` is set to true

high_memory_utilization_statistic— The statistic to apply to the alarm's high memory metric. One of the following is supported: SampleCount, Average, Sum, Minimum, Maximum

high_memory_utilization_threshold— Trigger an alarm if the ECS Cluster has a memory utilization percentage above this threshold. Only used if `enable_ecs_cloudwatch_alarms` is set to true

internal_alb_sg_ids— The Security Group IDs for the internal ALB

multi_az_capacity_provider— Enable a multi-AZ capacity provider to autoscale the EC2 ASGs created for this ECS cluster. Only used if `capacity_provider_enabled` = true

public_alb_sg_ids— The Security Group IDs for the public ALB

should_create_cloudwatch_log_group— When true, precreate the CloudWatch Log Group to use for log aggregation from the EC2 instances. This is useful if you wish to customize the CloudWatch Log Group with various settings such as retention periods and KMS encryption. When false, the CloudWatch agent will automatically create a basic log group to use.

ssh_grunt_iam_group— If you are using ssh-grunt, this is the name of the IAM group from which users will be allowed to SSH to the nodes in this ECS cluster. This value is only used if `enable_ssh_grunt` = true.

ssh_grunt_iam_group_sudo— If you are using ssh-grunt, this is the name of the IAM group from which users will be allowed to SSH to the nodes in this ECS cluster with sudo permissions. This value is only used if `enable_ssh_grunt` = true.

tenancy— The tenancy of this server. Must be one of: default, dedicated, or host.

vpc_id— The ID of the VPC in which the ECS cluster should be launched

vpc_subnet_ids— The IDs of the subnets in which to deploy the ECS cluster instances

Outputs

all_metric_widgets— A list of all the CloudWatch Dashboard metric widgets available in this module.

ecs_cluster_arn— The ARN of the ECS cluster

ecs_cluster_asg_name— The name of the ECS cluster's autoscaling group (ASG)

ecs_cluster_asg_names— For configurations with multiple ASGs, this contains a list of ASG names.

ecs_cluster_capacity_provider_names— For configurations with multiple capacity providers, this contains a list of all capacity provider names.

ecs_cluster_launch_configuration_id— The ID of the launch configuration used by the ECS cluster's auto scaling group (ASG)

ecs_cluster_name— The name of the ECS cluster

ecs_cluster_vpc_id— The ID of the VPC into which the ECS cluster is launched

ecs_cluster_vpc_subnet_ids— The VPC subnet IDs into which the ECS cluster can launch resources

ecs_instance_iam_role_arn— The ARN of the IAM role applied to ECS instances

ecs_instance_iam_role_id— The ID of the IAM role applied to ECS instances

ecs_instance_iam_role_name— The name of the IAM role applied to ECS instances

ecs_instance_security_group_id— The ID of the security group applied to ECS instances

metric_widget_ecs_cluster_cpu_usage— The CloudWatch Dashboard metric widget for the ECS cluster workers' CPU utilization metric.

metric_widget_ecs_cluster_memory_usage— The CloudWatch Dashboard metric widget for the ECS cluster workers' Memory utilization metric.
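
The outputs above can be consumed from the calling configuration. The sketch below assumes the service is instantiated as a module named `ecs_cluster` and that you define the `extra_instance_policy_arn` variable yourself; both names are hypothetical.

```hcl
# Re-export selected outputs so other configurations can read them.
output "ecs_cluster_arn" {
  value = module.ecs_cluster.ecs_cluster_arn
}

output "ecs_cluster_name" {
  value = module.ecs_cluster.ecs_cluster_name
}

# Hypothetical variable: ARN of an additional IAM policy for the ECS instances.
variable "extra_instance_policy_arn" {
  description = "ARN of an extra IAM policy to attach to the ECS instance role"
  type        = string
}

# Attach the extra policy to the instance role created by the module.
resource "aws_iam_role_policy_attachment" "extra_instance_policy" {
  role       = module.ecs_cluster.ecs_instance_iam_role_name
  policy_arn = var.extra_instance_policy_arn
}
```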